BUYING A HOUSE

A friend walked into a bank in a small town in Connecticut. As frequently portrayed in movies, the benefit of living in a small town is that you see many people that you know around town and often have a first name relationship with local merchants. It’s very personal and something that many equate to the New England charm of a town like New Canaan. As this friend, let us call him Dan, entered the bank, it was the normal greetings by name, discussion of the recent town fair, and a brief reflection on the weekend’s Little League games.

Dan was in the market for a home. Having lived in the town for over ten years, he wanted to upsize a bit, given that his family was now 20-percent larger than when he bought the original home. After a few months of monitoring the real estate listings, working with a local agent (whom he knew from his first home purchase), Dan and his wife settled on the ideal house for their next home. Dan’s trip to the bank was all business, as he needed a mortgage (much smaller than the one on his original home) to finance the purchase of the new home.

The interaction started as you may expect: “Dan, we need you to fill out some paperwork for us and we’ll be able to help you.” Dan proceeded to write down everything that the bank already knew about him: his name, address, Social Security number, date of birth, employment history, previous mortgage experience, income level, and estimated net worth. There was nothing unusual about the questions except for the fact that the bank already knew everything they were asking about.

After he finished the paperwork, it shifted to an interview, and the bank representative began to ask some qualitative questions about Dan’s situation and needs, and the mortgage type that he was looking for. The ever-increasing number of choices varied based on fixed versus variable interest rate, duration and amount of the loan, and other factors.

Approximately 60 minutes later, Dan exited the bank, uncertain of whether or not he would receive the loan. The bank knew Dan. The bank employees knew his wife and children by name, and they had seen all of his deposits and withdrawals over a ten-year period. They’d seen him make all of his mortgage payments on time.

Yet the bank refused to acknowledge, through their actions, that they actually knew him.

BRIEF HISTORY OF CUSTOMER SERVICE

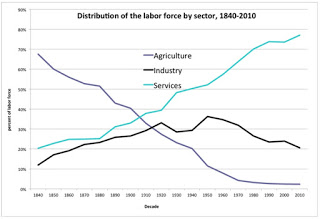

There was an era when customer support and service was dictated by what you told the person in front of you, whether that person was a storeowner, lender, or even an automotive dealer. It was then up to that person to make a judgment on your issue and either fix it or explain why it could not be fixed. That simpler time created a higher level of personal touch in the process, but then the telephone came along. The phone led to the emergence of call centers, which led to phone tree technology, which resulted in the decline in customer service.

CUSTOMER SERVICE OVER TIME

While technology has advanced exponentially since the 1800s, customer experience has not advanced as dramatically. While customer interaction has been streamlined and automated in many cases, it is debatable whether or not those cost-focused activities have engendered customer loyalty, which should be the ultimate goal.

The following list identifies the main historical influences on customer service. Each era has seen technological advances and along with that, enhanced interaction with customers.

Pre-1870: In this era, customer interaction was a face-to-face experience. If a customer had an issue, he would go directly to the merchant and explain the situation. While this is not scientific, it seems that overall customer satisfaction was higher in this era than others for the simple fact that people treat a person in front of them with more care and attention than they would a person once or twice removed.

1876: The telephone is invented. While the telephone did not replace the face-to-face era immediately, it laid the groundwork for a revolution that would continue until the next major revolution: the Internet.

1890s: The telephone switchboard was invented. Originally, phones worked only point-to-point, which is why phones were sold in pairs. The invention of the switchboard opened up the ability to communicate one-to-many. This meant that customers could dial a switchboard and then be directly connected to the merchant they purchased from or to their local bank.

1960s: Call centers first emerged in the 1960s, primarily a product of larger companies that saw a need to centralize a function to manage and solve customer inquiries. This was more cost effective than previous approaches, and perhaps more importantly, it enabled a company to train specialists to handle customer calls in a consistent manner. Touch-tone dialing (1963) and 1-800 numbers (1967) fed the productivity and usage of call centers.

1970s: Interactive Voice Response (IVR) technology was introduced into call centers to assist with routing and to offer the promise of better problem resolution. Technology for call routing and phone trees improved into the 1980s, but it is not something that ever engendered a positive experience.

1980s: For the first time, companies began to outsource the call-center function. The belief was that if you could pay someone else to offer this service and it would get done at a lower price, then it was better. While this did not pick up steam until the 1990s, this era marked the first big move to outsourcing, and particularly outsourcing overseas, to lower-cost locations.

1990s to present: This era, marked by the emergence of the Internet, has seen the most dramatic technology innovation, yet it’s debatable whether or not customer experience has improved at a comparable pace. The Internet brought help desks, live chat support, social media support, and the widespread use of customer relationship management (CRM) and call-center software.

Despite all of this progress and developing technology through the years, it still seems like something is missing. Even the personal, face-to-face channel (think about Dan and his local bank) is unable to appropriately service a customer that the employees know (but pretend not to, when it comes to making business decisions).

While we have seen considerable progress in customer support since the 1800s, the lack of data in those times prevented the intimate customer experience that many longed for. It’s educational to explore a couple of examples from before the data era, to understand the strengths and limitations of customer service at that time.

BOEING

The United States entered World War I on April 6, 1917. The U.S. Navy quickly became interested in Boeing’s Model C seaplane. The seaplane was the first “all-Boeing” design and utilized either single or dual pontoons for water landing. The seaplane promised agility and flexibility, which were features that the Navy felt would be critical to managing the highly complex environment of a war zone. Since all of the testing of the seaplane was conducted in Pensacola, Florida, the company had to disassemble the planes and ship them across the United States by rail. Then, in the process, they had to decide whether or not to send an engineer and pilot, along with the spare parts, in order to ensure the customer’s success. This is the pinnacle of customer service: knowing your customers, responding to their needs, and delivering what is required, where it is required. Said another way, the purchase (or prospect of purchase) of the product assumed customer service.

The Boeing Company and the Douglas Aircraft Company, which would later merge, led the country in airplane innovation. As Boeing expanded after the war years, the business grew to include much more than just manufacturing, with the advent of airmail contracts and a commercial flight operation known as United Air Lines. Each of these expansions led to more opportunities, namely around a training school, to provide United Air Lines an endless supply of skilled pilots.

In 1936, Boeing founded its Service Unit. As you might expect, the first head of the unit was an engineer (Wellwood Beall). After all, the mission of the unit was expertise, so a top engineer was the right person for the job. As Boeing expanded overseas, Beall decided he needed to establish a division focused on airplane maintenance and training the Chinese, as China had emerged as a top growth area.

When World War II came along, Boeing quickly dedicated resources to training, spare parts, and maintaining fleets in the conflict. A steady stream of Boeing and Douglas field representatives began flowing to battlefronts on several continents to support their companies’ respective aircraft. Boeing put field representatives on the front lines to ensure that planes were operating and, equally importantly, to share information with the company engineers regarding needed design improvement.

Based on lessons learned from its first seven years in operation, the service unit reorganized in 1943, around four areas:

-Maintenance publications

-Field service

-Training

-Spare parts

To this day, that structure is still substantially intact. Part of Boeing’s secret was a tight relationship between customer service technicians and the design engineers. This ensured that the Boeing product-development team was focused on the things that mattered most to their clients.

Despite the major changes in airplane technology over the years, the customer-support mission of Boeing has not wavered: “To assist the operators of Boeing planes to the greatest possible extent, delivering total satisfaction and lifetime support.” While customer service and the related technology have changed dramatically through the years, the attributes of great customer service remain unchanged. We see many of these attributes in the Boeing example:

1. Publications: Sharing information, in the form of publications available to the customer base, allows customers to “help themselves.”

2. Teamwork: The linkage between customer support and product development is critical to ensuring client satisfaction over a long period of time.

3. Training: Similar to the goal with publications, training makes your clients smarter, and therefore, they are less likely to have issues with the products or services provided.

4. Field service: Be where your clients are, helping them as it’s needed.

5. Spare parts: If applicable, provide extra capabilities or parts needed to achieve the desired experience in the field.

6. Multi-channel: Establishing multiple channels enables the customer to ask for and receive assistance.

7. Service extension: Be prepared to extend service to areas previously unplanned for. In the case of Boeing, this was new geographies (China) and at unanticipated time durations (supporting spare parts for longer than expected).

8. Personalization: Know your customer and their needs, and personalize their interaction and engagement.

Successful customer service entails each of these aspects in some capacity. The varied forms of customer service depend largely on the industry and product, but also the role that data can play.

FINANCIAL SERVICES

There are a multitude of reasons why a financial services firm would want to invest in a call center: lower costs and consolidation, improved customer service, cross-selling, and extended geographical reach.

Financial services have a unique need for call centers and expertise in customer service, given that customer relationships are ultimately what they sell (the money is just a vehicle towards achieving the customer relationship). Six of the most prominent areas of financial services for call centers are:

1) Retail banking: Supporting savings and checking accounts, along with multiple channels (online, branch, ATM, etc.)

2) Retail brokerage: Advising and supporting clients on securities purchases, funds transfer, asset allocation, etc.

3) Credit cards: Managing credit card balances, including disputes, limits, and payments

4) Insurance: Claims underwriting and processing, and related status inquiries

5) Lending: Advising and supporting clients on securities purchases, funds transfer, asset allocation, etc.

6) Consumer lending: A secured or unsecured loan with fixed terms issued by a bank or financing company. This includes mortgages, automobile loans, etc.

Consumer lending is perhaps the most interesting financial services area to explore from the perspective of big data, as it involves more than just responding to customer inquiries. It involves the decision to lend in the first place, which sets off all future interactions with the consumer.

There are many types of lending that fall into the domain of consumer lending, including credit cards, home equity loans, mortgages, and financing for cars, appliances, and boats, among many other possible items, many of which are deemed to have a finite life.

Consumer lending can be secured or unsecured. This is largely determined by the feasibility of securing the loan (it’s easy to secure an auto loan with the auto, but it’s not so easy to secure credit card loans without a tangible asset), as well as the parties’ risk tolerance and specific objectives about the interest rate and the total cost of the loan. Unsecured loans obviously will tend to have higher returns (and risk) for the lender.

Ultimately, from the lender’s perspective, the decision to lend or not to lend will be based on the lender’s belief that she will get paid back, with the appropriate amount of interest.

A consumer-lending operation, and the customer service that would be required to manage the relationships, is extensive. Setting it up requires the consideration of many factors:

Call volumes: Forecasting monthly, weekly, and hourly engagement

Staffing: Calibrating on a monthly, weekly, and hourly basis, likely based on expected call volumes

Performance management: Setting standards for performance with the staff, knowing that many situations will be unique

Location: Deciding on a physical or virtual customer service operation, knowing that this decision impacts culture, cost, and performance

A survey of call center operations from 1997, conducted by Holliday, showed that 64 percent of the responding banks expected increased sales and cross sales, while only 48 percent saw an actual increase. Of the responding banks, 71 percent expected the call center to increase customer retention; however, only 53 percent said that it actually did.

The current approach to utilizing call centers is not working and, ironically, has not changed much since 1997.

THE DATA ERA

Data will transform customer service, as data can be the key ingredient in each of the aspects of successful customer service. The lack of data or lack of use of data is preventing the personalization of customer service, which is the reason that it is not meeting expectations.

In the report, titled “Navigate the Future Of Customer Service” (Forrester, 2012), Kate Leggett highlights key areas that depend on the successful utilization of big data. These include: auditing the customer service ecosystem (technologies and processes supported across different communication channels); using surveys to better understand the needs of customers; and incorporating feedback loops by measuring the success of customer service interactions against cost and satisfaction goals.

AN AUTOMOBILE MANUFACTURER

Servicing automobiles post-sale requires a complex supply chain of information. In part, this is due to the number of parties involved. For example, a person who has an issue with his car is suddenly dependent on numerous parties to solve the problem: the service department, the dealership, the manufacturer, and the parts supplier (if applicable). That is four relatively independent parties, all trying to solve the problem, and typically pointing to someone else as being the cause of the issue.

This situation can be defined as a data problem. More specifically, the fact that each party had their own view of the problem in their own systems, which were not integrated, contributed to the issue. As any one party went to look for similar issues (i.e. queried the data), they received back only a limited view of the data available.

A logical solution to this problem is to enable the data to be searched across all parties and data silos, and then reinterpreted into a single answer. The challenge with this approach to using data is that it is very much a pull model, meaning that the person searching for an answer has to know what question to ask. If you don’t know the cause of a problem, how can you possibly know what question to ask in order to fix it?

This problem necessitates data to be pushed from the disparate systems, based on the role of the person exploring and based on the class of the problem. Once the data is pushed to the customer service representatives, it transforms their role from question takers to solution providers. They have the data they need to immediately suggest solutions, options, or alternatives. All enabled by data.
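The difference between pulling and pushing can be made concrete with a small sketch. Everything here is hypothetical and for illustration only: the `Record` fields, the three silos, and the `push_context` helper are assumptions, not a description of any manufacturer's actual systems. The idea is that the representative never writes a query; the role and the class of the problem alone decide what is gathered from every party's system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    source: str         # which party's system the record came from
    problem_class: str  # e.g. "brakes" or "transmission"
    audience: str       # the role this record is useful to
    note: str


# Hypothetical, unintegrated data silos, one per party.
SILOS = {
    "dealership": [
        Record("dealership", "brakes", "service_rep", "3 similar complaints this month"),
    ],
    "manufacturer": [
        Record("manufacturer", "brakes", "service_rep", "Service bulletin: replace caliper seal"),
        Record("manufacturer", "brakes", "engineer", "Seal supplier changed last quarter"),
    ],
    "parts_supplier": [
        Record("parts_supplier", "transmission", "service_rep", "Gasket on backorder"),
    ],
}


def push_context(role, problem_class, silos=SILOS):
    """Gather, across all silos, the records that match this role and problem class."""
    return [
        rec
        for records in silos.values()
        for rec in records
        if rec.audience == role and rec.problem_class == problem_class
    ]


# A service representative opens a brake complaint; the briefing arrives unasked.
briefing = push_context("service_rep", "brakes")
for rec in briefing:
    print(f"[{rec.source}] {rec.note}")
```

In a pull model the representative would have to know to ask the manufacturer about caliper seals. Here the same information surfaces simply because of who is looking and what kind of problem was logged.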

ZENDESK

Mikkel Svane spent many years of his life implementing help-desk software. The complaints from that experience were etched in his mind: It’s difficult to use, it’s expensive, it does not integrate easily with other systems, and it’s very hard to install. This frustration led to the founding of Zendesk.

As of December 2013, it is widely believed that Zendesk has over 20,000 enterprise clients. Zendesk was founded in 2007, and just seven short years later, it had a large following. Why? In short, it found a way to leverage data to transform customer service.

Zendesk asserts that bad customer service costs major economies around the world $338 billion annually. Even worse, they indicate that 82 percent of Americans report having stopped doing business with a company because of poor customer service. In the same vein as Boeing in World War II, this means that customer service is no longer an element of customer satisfaction; it is perhaps the sole determinant of customer satisfaction.

A simplistic description of Zendesk would highlight the fact that it is email, tweet, phone, chat, and search data, all integrated in one place and personalized for the customer of the moment. Mechanically, Zendesk is creating and tracking individual customer support tickets for every interaction. The interaction can come in any form (social media, email, phone, etc.) and therefore, any channel can kick off the creation of a support ticket. As the support ticket is created, a priority level is assigned, any related history is collated and attached, and it is routed to a specific customer-support person. But, what about the people who don’t call or tweet, yet still have an issue?

Zendesk has also released a search analytics capability, which is programmed using sophisticated data modeling techniques to look for customer issues, instead of just waiting for the customer to contact the company. A key part of the founding philosophy of Zendesk was the realization that roughly 35 percent of consumers are silent users, who seek their own answers, instead of contacting customer support. On one hand, this is a great advantage for a company, as it reduces their cost of support. But it is fraught with risk of customer satisfaction issues, as a customer may decide to move to a competitor without the incumbent ever knowing they needed help.
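The ticket mechanics described in this section (any channel opens a ticket, a priority is assigned, related history is attached, and the ticket is routed to a person) can be sketched in a few lines. This is a toy illustration, not Zendesk's API or implementation: the `HelpDesk` and `Ticket` classes, the keyword-based priority rule, and the round-robin routing are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from itertools import count

# Assumed rule for the sketch: these words mark a message as urgent.
URGENT_WORDS = ("outage", "refund", "cancel")


@dataclass
class Ticket:
    id: int
    customer: str
    channel: str   # "email", "twitter", "phone", "chat", ...
    message: str
    priority: str
    history: list = field(default_factory=list)  # ids of the customer's earlier tickets
    assignee: str = ""


class HelpDesk:
    def __init__(self, agents):
        self.agents = list(agents)
        self.tickets = []
        self._ids = count(1)

    def open_ticket(self, customer, channel, message):
        # 1. Assign a priority level (here: a naive keyword check).
        urgent = any(word in message.lower() for word in URGENT_WORDS)
        priority = "high" if urgent else "normal"
        # 2. Collate the customer's earlier tickets, whatever channel they used.
        history = [t.id for t in self.tickets if t.customer == customer]
        # 3. Route to a specific support person (here: simple round-robin).
        assignee = self.agents[len(self.tickets) % len(self.agents)]
        ticket = Ticket(next(self._ids), customer, channel, message,
                        priority, history, assignee)
        self.tickets.append(ticket)
        return ticket


desk = HelpDesk(["ana", "raj"])
first = desk.open_ticket("dan", "email", "Question about my invoice")
second = desk.open_ticket("dan", "twitter", "I want a refund now")
print(second.priority, second.history, second.assignee)
```

The point of the sketch is that the second ticket arrives on a different channel yet already carries the first one in its history, so the person it is routed to does not start from zero.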

Svane, like the executives at Boeing in the World War II era, sees customer service as a means to build relationships with customers, as opposed to a hindrance. He believes this perspective is starting to catch on more broadly. “What has happened over the last five or six years is that the notion of customer service has changed from just being this call center to something where you can create real, meaningful long-term relationships with your customers and think about it as a revenue center.”

BUYING A HOUSE (CONTINUED)

It would be very easy for Dan to receive a loan and for the bank to underwrite that loan if the right data were available to make the decision. With the right data, the bank would know who he is, as well as his entire history with the bank, recent significant life changes, credit behavior, and many other factors. This data would be pushed to the bank representative as Dan walked in the door. When the representative asked, “How can I help you today?” and learned that Dan was in the market for a new home, the representative would simply say, “Let me show you what options are available to you.” Dan could make a spot decision or choose to think about it, but either way, it would be as simple as purchasing groceries. That is the power of data, transforming customer service.

This post is adapted from the book Big Data Revolution: What farmers, doctors, and insurance agents teach us about discovering big data patterns, Wiley, 2015. Find more on the web at http://www.bigdatarevolutionbook.com